NVIDIA’s Blackwell GB200 NVL72 AI racks are posting standout results in AI performance tests, significantly outperforming AMD’s Instinct MI355X. On Mixture of Experts (MoE) workloads in particular, NVIDIA’s rack-scale systems hold a wide lead in recent benchmarks.

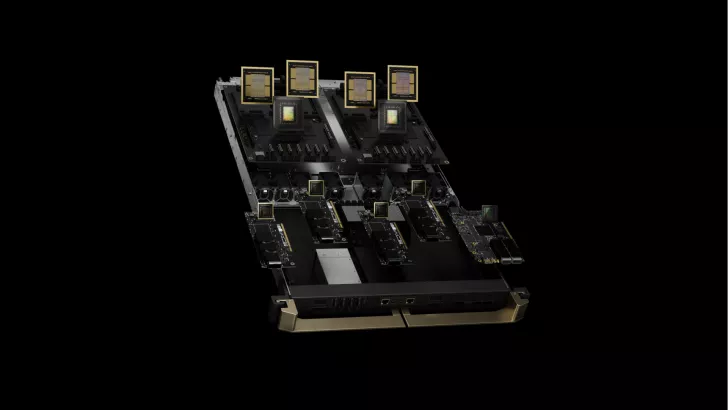

NVIDIA’s Strategic Advantage in MoE Architectures

As AI models increasingly shift toward MoE architectures, efficient use of compute resources becomes crucial. Unlike dense models, MoE configurations run into substantial bottlenecks as they scale, primarily because of the extensive all-to-all communication required between the GPUs and nodes that host the experts, which adds latency and strains bandwidth. Hyperscalers are therefore looking for the best performance per dollar, and reports suggest that NVIDIA’s GB200 NVL72 is emerging as the preferred option for MoE architectures.
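To get a feel for why all-to-all traffic grows into a bottleneck, here is a rough back-of-the-envelope sketch. It assumes experts are spread evenly across GPUs and that the router picks experts uniformly at random, which real models do not guarantee. The function name and every parameter value are hypothetical illustrations, not figures from the benchmarks.

```python
def all_to_all_bytes_per_gpu(tokens_per_gpu, hidden_size, top_k,
                             num_gpus, bytes_per_value=2):
    """Estimate bytes one GPU sends in a single MoE layer.

    Assumption: uniform routing over evenly sharded experts, so a routed
    token copy lands on a remote GPU with probability (num_gpus - 1) / num_gpus.
    """
    if num_gpus < 1:
        raise ValueError("num_gpus must be at least 1")
    remote_fraction = (num_gpus - 1) / num_gpus
    # Dispatch: each token is copied to top_k experts as a hidden_size vector.
    dispatch = (tokens_per_gpu * top_k * hidden_size
                * bytes_per_value * remote_fraction)
    # Combine: expert outputs travel back, roughly mirroring the dispatch.
    return 2 * dispatch


if __name__ == "__main__":
    # Hypothetical workload: 1024 tokens per GPU, hidden size 4096, top-2 routing.
    for gpus in (1, 8, 72):
        mib = all_to_all_bytes_per_gpu(1024, 4096, 2, gpus) / 2**20
        print(f"{gpus:>2} GPUs: {mib:.1f} MiB sent per GPU per MoE layer")
```

Under these assumptions, almost every routed token leaves its home GPU once the experts are spread widely, so the speed of the interconnect between GPUs matters as much as raw compute.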

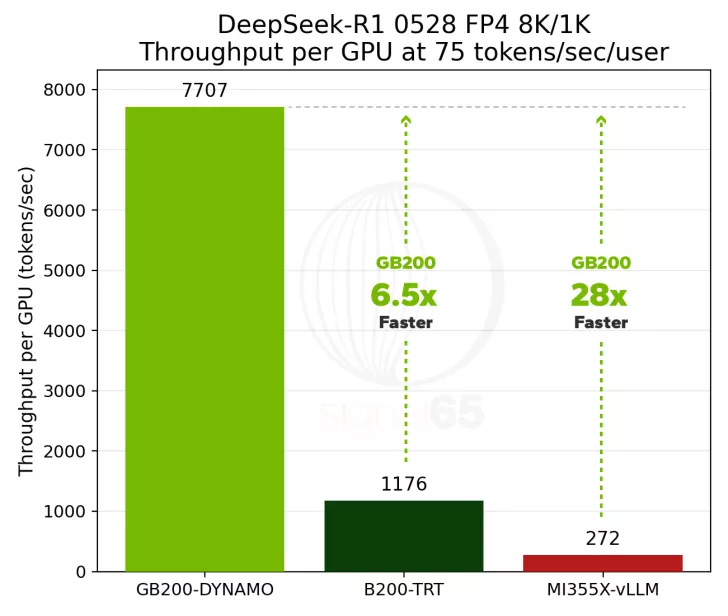

Performance Through the Roof with Co-Design

SemiAnalysis’s InferenceMAX benchmarks show NVIDIA’s Blackwell AI servers delivering 28 times higher throughput per GPU at an interactivity level of 75 tokens per second per user, compared with AMD’s MI355X in similar settings. The gap is attributed to NVIDIA’s ‘co-design’ approach: a 72-chip configuration with 30TB of fast shared memory lets expert parallelism span the whole rack, which addresses the typical performance bottlenecks of scaling MoE AI models.

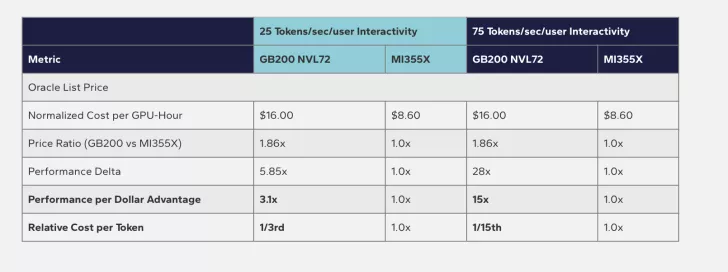

The Cost Efficiency Leader

NVIDIA’s GB200 NVL72 racks lead not only in performance but also in cost efficiency. According to figures based on Oracle Cloud pricing, these racks cut the relative cost per token to 1/15th while sustaining higher interactivity rates. This efficiency helps explain why NVIDIA’s hardware is so popular across the industry, and it keeps the company ahead across inference workloads, including the prefill and decode phases. While AMD’s Instinct MI355X remains a strong contender in dense environments, NVIDIA’s current lead in MoE is clear. As competition heats up with future solutions like AMD’s Helios and NVIDIA’s Vera Rubin, the industry awaits AMD’s next move.
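For readers who want to see how a cost-per-token comparison like the one above is put together, the arithmetic can be sketched as follows. This is a minimal illustration, assuming cost is simply the hourly GPU rental price divided by the tokens a GPU produces in an hour. The function names and all prices and throughputs are hypothetical placeholders, not Oracle’s or SemiAnalysis’s actual figures.

```python
def cost_per_million_tokens(gpu_hourly_price_usd, tokens_per_second_per_gpu):
    """Dollars per one million generated tokens on a single GPU."""
    if tokens_per_second_per_gpu <= 0:
        raise ValueError("throughput must be positive")
    tokens_per_hour = tokens_per_second_per_gpu * 3600
    return gpu_hourly_price_usd / tokens_per_hour * 1_000_000


def relative_cost(price_a, throughput_a, price_b, throughput_b):
    """Cost per token of system A as a fraction of system B's."""
    return (cost_per_million_tokens(price_a, throughput_a)
            / cost_per_million_tokens(price_b, throughput_b))


if __name__ == "__main__":
    # Hypothetical inputs: system A rents for twice the hourly price of
    # system B but generates twenty times the tokens per second per GPU.
    ratio = relative_cost(price_a=2.0, throughput_a=2000.0,
                          price_b=1.0, throughput_b=100.0)
    print(f"System A cost per token relative to B: {ratio:.2f}")
```

The takeaway is that a pricier GPU can still come out far cheaper per token when its throughput advantage outpaces its price premium, which is the dynamic the benchmark data points to.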