Foxconn, a key supplier in NVIDIA's supply chain, has reportedly secured orders for AI clusters built around Google’s TPUs. The move marks a notable shift for the Taiwanese manufacturer, which now serves two competing tech giants.

Foxconn’s Dual Role in AI Expansion

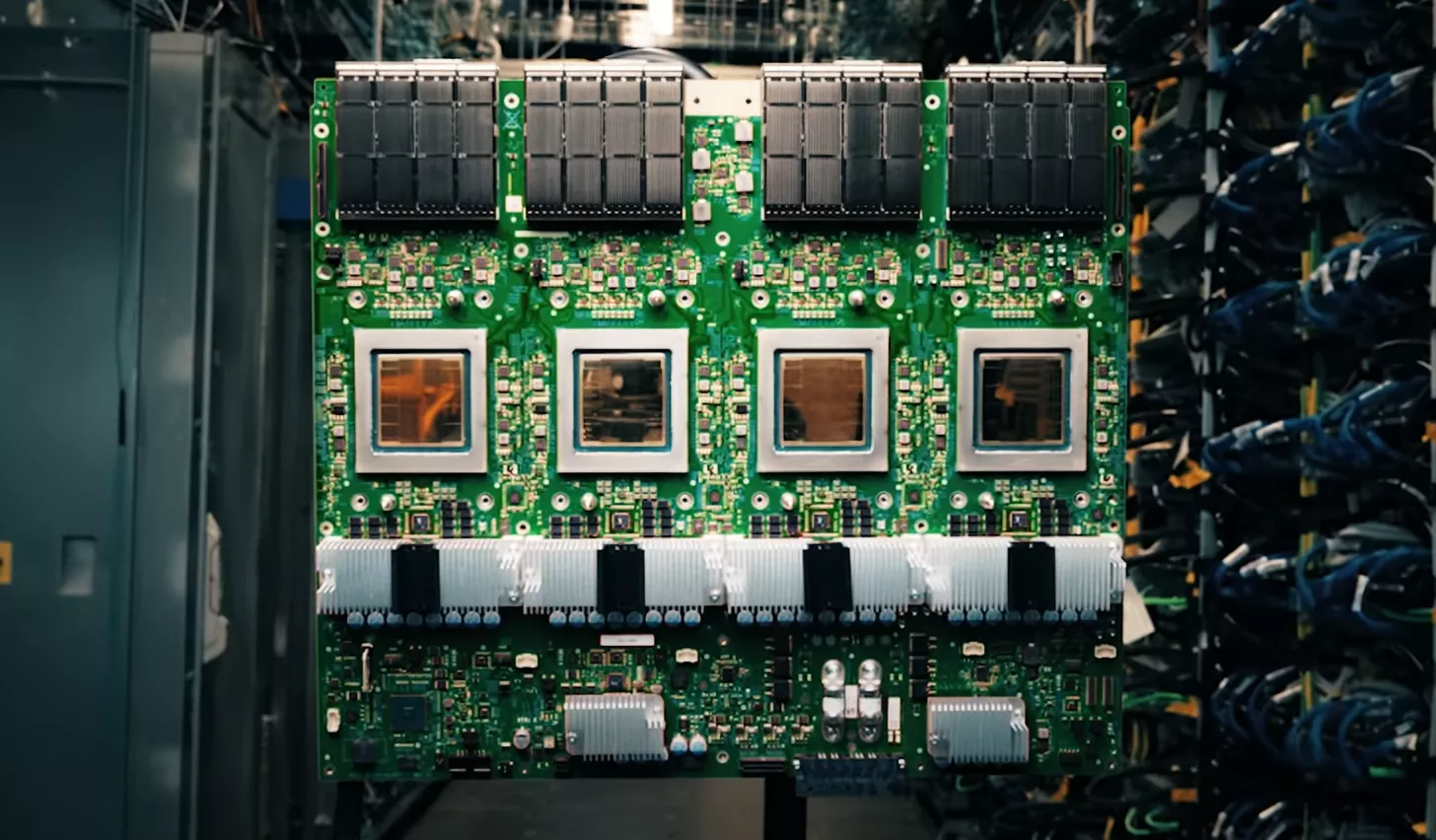

Interest in ASICs has surged, particularly since the launch of Google’s latest TPU platform, Ironwood. Google’s TPUs are gaining traction and are reportedly close to being adopted by several major companies, including Meta. As Google pushes its TPUs toward external customers, reports indicate that Foxconn has been tasked with producing Google’s TPU compute trays and will also take part in Google’s ‘Intrinsic’ robotics initiative.

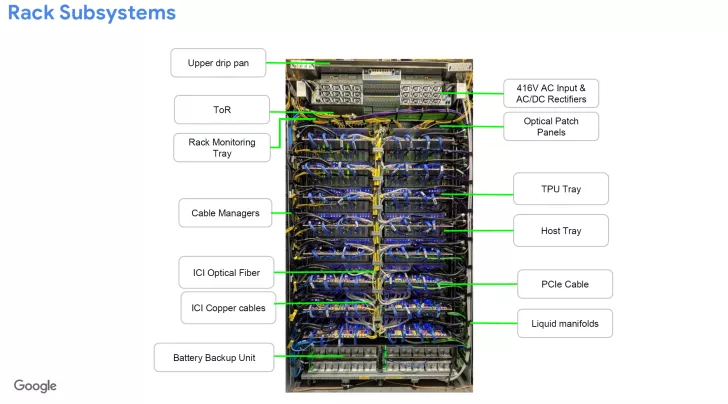

Industry insiders say that Google’s AI servers, built with its self-developed TPUs, are mainly divided into two racks: one rack for TPUs and the other for computing trays. According to Google’s proposed supply chain, for every rack of TPUs shipped, Foxconn will ship one rack of computing trays, resulting in a 1:1 supply ratio.

– Taiwan Economic Daily
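Under the reported 1:1 ratio, Foxconn’s compute-tray volume tracks Google’s TPU rack shipments one-for-one. The short Python sketch below illustrates that arithmetic. The function names and the order sizes are hypothetical illustrations, not figures from the report.

```python
# Hypothetical sketch of the reported 1:1 shipment ratio between TPU racks
# and Foxconn compute-tray racks. Only the ratio comes from the report;
# the order sizes below are made-up inputs.

TRAY_RACKS_PER_TPU_RACK = 1  # reported 1:1 supply ratio


def compute_tray_racks(tpu_racks: int, ratio: int = TRAY_RACKS_PER_TPU_RACK) -> int:
    """Return the number of compute-tray racks implied by a TPU rack order."""
    if tpu_racks < 0:
        raise ValueError("tpu_racks must be non-negative")
    return tpu_racks * ratio


def total_racks(tpu_racks: int) -> int:
    """Return TPU racks plus the matching compute-tray racks."""
    return tpu_racks + compute_tray_racks(tpu_racks)


if __name__ == "__main__":
    for order in (10, 250):  # hypothetical TPU rack orders
        print(order, compute_tray_racks(order), total_racks(order))
```

With a 1:1 ratio, every TPU rack order doubles the total rack count, half of which is Foxconn’s tray racks.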

Google’s seventh-generation TPU, Ironwood, goes beyond a standalone chip: it scales out through rack-based infrastructure that Google calls the ‘Superpod’. A single pod links 9,216 chips and delivers 42.5 exaFLOPS of aggregate FP8 compute. The chips are connected in a 3D torus topology, in which each chip links directly to six neighbors and links wrap around at the edges, enabling dense interconnect across the whole pod. Reports suggest that Foxconn will produce the compute trays that accompany Google’s TPU rack orders.
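To put the pod figures in perspective, dividing 42.5 exaFLOPS evenly across 9,216 chips gives roughly 4.6 petaFLOPS of FP8 compute per chip. The Python sketch below does that division and shows how a 3D torus assigns each chip six wraparound neighbors. The torus shape (16, 24, 24) is a hypothetical factorization of 9,216 chosen for illustration; it is not Google’s published pod layout, and the even split ignores any real-world efficiency losses.

```python
# Back-of-envelope sketch based on the pod figures quoted in the article.
# The torus shape (16, 24, 24) is a HYPOTHETICAL factorization of 9,216,
# not Google's actual pod layout.

from math import prod

POD_CHIPS = 9_216
POD_FP8_EXAFLOPS = 42.5


def per_chip_petaflops(total_exaflops: float, chips: int) -> float:
    """Split aggregate exaFLOPS evenly across chips; return petaFLOPS per chip."""
    return total_exaflops * 1_000 / chips


def torus_neighbors(coord, shape):
    """Return the six neighbors of `coord` in a 3D torus of the given shape.

    Each axis wraps around (modulo arithmetic), so a chip on one edge
    links to the chip on the opposite edge.
    """
    x, y, z = coord
    X, Y, Z = shape
    return [
        ((x + 1) % X, y, z), ((x - 1) % X, y, z),
        (x, (y + 1) % Y, z), (x, (y - 1) % Y, z),
        (x, y, (z + 1) % Z), (x, y, (z - 1) % Z),
    ]


if __name__ == "__main__":
    shape = (16, 24, 24)  # hypothetical torus dimensions
    assert prod(shape) == POD_CHIPS
    print(f"{per_chip_petaflops(POD_FP8_EXAFLOPS, POD_CHIPS):.2f} PFLOPS per chip")
    print(torus_neighbors((0, 0, 0), shape))
```

The wraparound links are what distinguish a torus from a plain 3D mesh: the chip at (0, 0, 0) connects directly to (15, 0, 0) rather than sitting at the edge of the grid.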

Impact on AI Workloads and Industry Trends

As AI workloads shift toward inference, companies are rapidly revising their compute strategies to balance performance against total cost of ownership (TCO). Google’s TPUs are emerging as a leading option, prompting debate over whether custom silicon from tech giants could challenge NVIDIA. Growing interest in Google’s TPU solutions across the supply chain points to a potential shift in the AI hardware landscape.