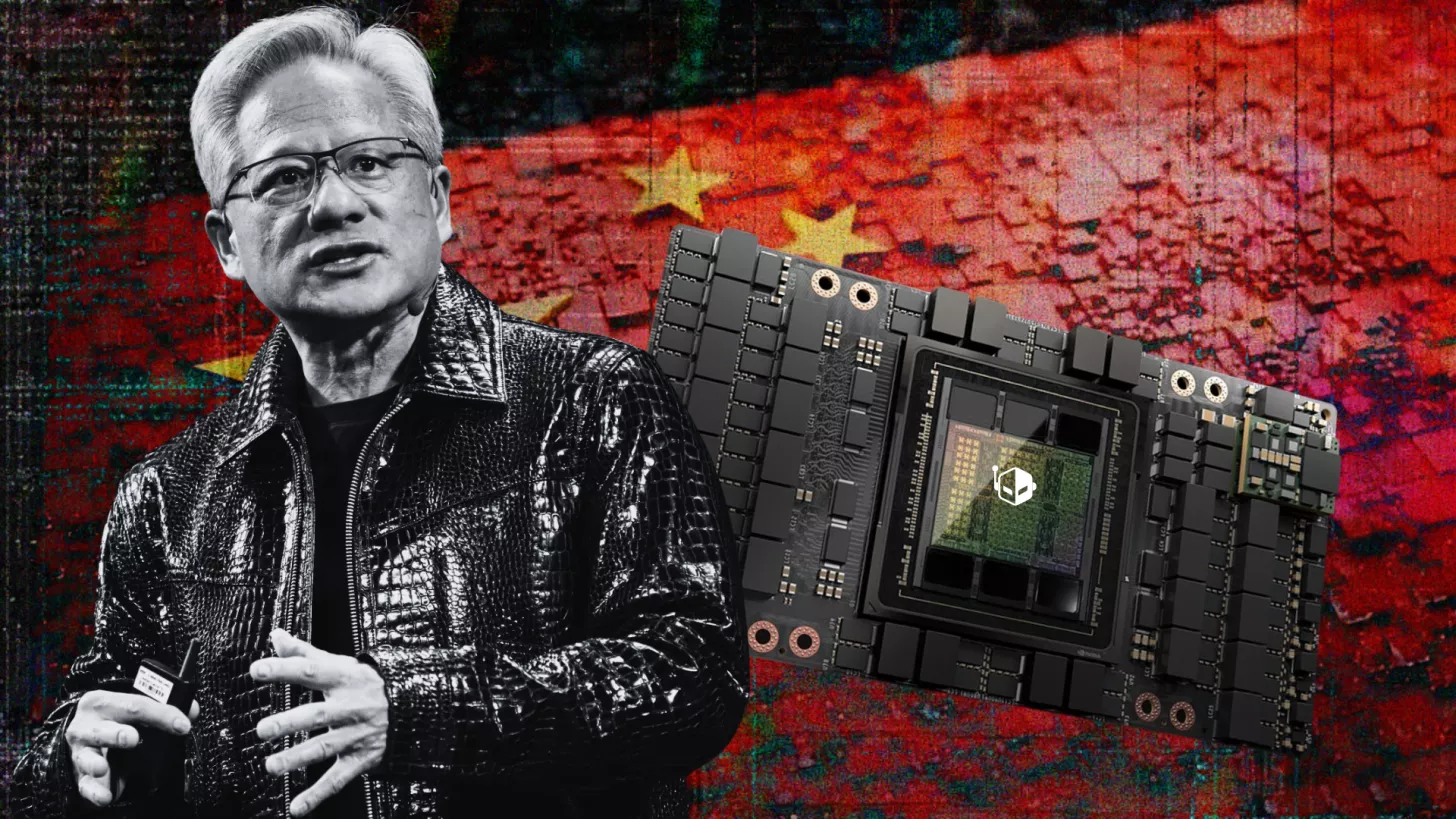

NVIDIA’s strategy for keeping its AI chips competitive in China has caught the tech world’s attention. Reports suggest that the H200, which NVIDIA will offer to the Chinese market, will carry only a slight price premium over the H20, the export-compliant chip it previously sold there. The pricing appears designed to make the H200 an easy choice for Chinese buyers, even as China pushes to build a self-reliant tech infrastructure.

NVIDIA’s Pricing Strategy for the H200 AI Chips

After the Trump administration eased export restrictions, NVIDIA was able to offer its H200 AI chips to China. There was skepticism about demand, however, given China’s push for technology independence and the prospect of NVIDIA’s Blackwell chips as a future option. Nevertheless, industry analysts report that the H200’s pricing will be hard to ignore, which could sustain strong demand.

H200 vs. H20: A Technical Comparison

According to reports, an H200 configuration is priced similarly to a comparable H20 configuration while offering more than six times the performance. An eight-chip H200 cluster could reportedly cost around $200,000, delivering far greater capability at a competitive price. Here’s a detailed comparison of the two chips:

| Category | NVIDIA H20 | NVIDIA H200 | Estimated Improvement (H20 → H200) |
|---|---|---|---|
| Architecture | Hopper (export-limited variant) | Hopper | n/a |
| Process Node | TSMC 4N | TSMC 4N | n/a |
| HBM Type | HBM3 | HBM3E | n/a |
| HBM Capacity | ~96 GB | 141 GB | ≈ +47% capacity |
| HBM Bandwidth | ~4.0 TB/s | 4.8 TB/s | ≈ +20% bandwidth |
| Intended Use Case | Inference-focused (restricted training) | Full training + inference + HPC | Functional uplift |
| Compute Throughput (FP8 / FP16) | Significantly reduced vs. H100 (export-compliant) | Similar to H100-class, full Hopper capability | Substantial uplift |
| PCIe / SXM Form Factors | PCIe versions only (typically) | PCIe + SXM | n/a |
| NVLink Support | Restricted / limited | Full NVLink | Major system-level uplift |
| Typical Deployment | China-compliant LLM inference | Global training & inference at scale | n/a |
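As a quick check on the table’s percentages, the short sketch below recomputes the memory-capacity and bandwidth uplifts from the listed figures. It treats the approximate H20 values (~96 GB, ~4.0 TB/s) as exact, so the results are estimates rather than official specifications.

```python
# Memory figures taken from the comparison table above.
# The H20 values are approximate ("~"), so the computed uplifts are estimates.
SPECS = {
    "HBM capacity (GB)": (96.0, 141.0),
    "HBM bandwidth (TB/s)": (4.0, 4.8),
}


def uplift_percent(old: float, new: float) -> float:
    """Relative increase going from `old` to `new`, in percent."""
    return (new - old) / old * 100.0


if __name__ == "__main__":
    for name, (h20, h200) in SPECS.items():
        print(f"{name}: H20 {h20} -> H200 {h200}: +{uplift_percent(h20, h200):.0f}%")
```

Rounded to whole percentages, this reproduces the table’s ≈ +47% capacity and ≈ +20% bandwidth figures.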

Impact on the Chinese Market

Reports indicate that Chinese tech giants such as Alibaba, Tencent, and ByteDance are preparing to invest heavily in infrastructure once they gain access to the H200, with planned investments approaching $31 billion. If NVIDIA begins supplying the chips by mid-February, as reported, it could significantly reshape the Chinese AI landscape. Although domestic companies like Huawei are advancing, they still struggle to match the capabilities of NVIDIA’s offerings, further cementing NVIDIA’s role in China’s AI ambitions.

The reliance on NVIDIA hardware for training advanced AI models underscores the challenges facing China’s domestic chip providers. As companies in the region race to acquire NVIDIA’s H200 and AMD’s MI308 accelerators, demand for these chips remains robust.